Refract

In the past few years, articles revealed many incidents of malicious, retaliatory abuse of deepfake AI targeting celebrities and common folks alike. With the goal of protecting identity theft, we created Refract — aiming to cloak selfies with indiscernible pixels that disrupt deepfake models.

We first tried introducing noise, which is random pixel-level distortion, along with trying to distort the face directly. results were not effective — random pixel level distortion is easily factored in the training ai models to ignore, and distorting the face directly did not maintain visual similarity to the original photo.

Our approach focuses on attacking the training process of deepfake models. Deepfake models typically operate by extracting facial feature embeddings – the unique characteristics that define a person's visual identity, such as face shape or eye size. Deepfake models use embeddings to replicate a person's likeness to generate convincing imitations. By targeting feature embeddings, we developed a strategy to shield an individual's visual identity from being captured.

We began by studying Glaze's research paper for inspiration on how to build our own model. The Glaze software protects artist’s works by adding a few pixels encoded with the embeddings of a completely different art style. Our novel approach is similar — our model selects a different face from our database and “cloaks” the original picture with a few pixels of new facial features embeddings. We fine-tuned the model to make the source photo look like the target photo, without introducing human noticeable changes. After multiple iterations, we successfully optimized the parameters in our model to achieve desired results.

We generated 500 faces from thispersondoesnotexist.com to train our model instead of scraping for people’s faces online without proper consent — which would’ve contradicted the purpose of this project 😅 !!

Together, we wrote a Python script to scrape thispersondoesnotexist.com faces into our database, and another Python script with Hugging Face to get feature embeddings from a picture of a face. Then, we wrote the algorithm that searched our dataset for a target face with the most similar feature embeddings to the original face.

The last part of our model we implemented was to produce a new image that minimized visual difference, using the L2 norm metric, and maximized feature embedding differences, using the LPIPS metric.

After working on the core implementation together, we delegated the rest of the work — model fine tuning, and frontend & design.

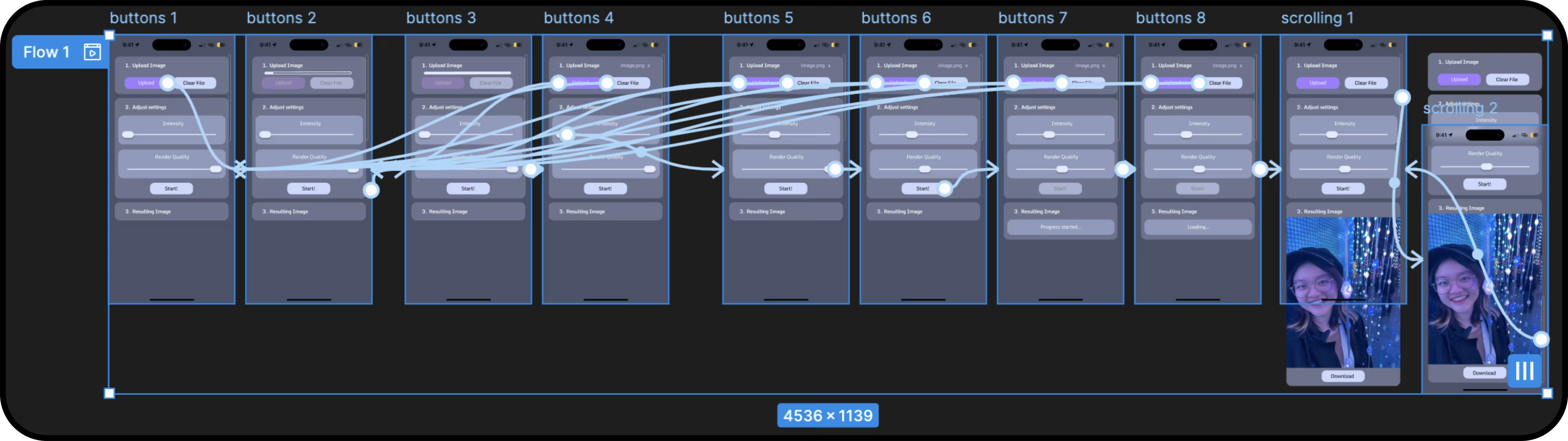

I worked with another member on branding — colors, typography, personality, etc. We designed a simple, consistent style in Figma to prototype. Then, we implemented the frontend, working with another team member in charge of DevOps to connect the backend. This was the first time I coded in Typescript, and learnt a lot of React.js as well!

Cloaked’s similarity to Target: 75.67%

Cloaked’s similarity to Original: 29.57%

Cloaked images are 2.56 times more effective at fooling deepfakes!

Original

Cloaked

The output image is almost completely identical to the source image in the human eye, but actually very different in terms of facial features calculated from the machine, which used the 500 images ran on our model, and measured the Euclidean distance of the original and cloaked images. Cloaked images are approx. 2.56X more similar to the target than the original in feature embedding space!

Most of us have likely posted on social media before and thus we are all prone to web-scraping activities of scammers where they, unbeknownst to you, steal your photos for deepfakes and other forms of identity theft. With Refract, we easily prevent all of these kinds of attacks.

Hit me up!